Reduce CPU overhead when profiling

The first question when profiling in UE4 is what build configuration should be used. When profiling the GPU, you want CPU performance to be fast enough to stay out of the way during profiling. Debug builds should be avoided for profiling, of course, because the engine code is not compiled with optimization enabled.

Be aware that Development builds have higher CPU overhead than Test or Shipping. Still, it can be convenient to profile Development builds. To reduce CPU overhead in Development builds, you should turn off any unnecessary processing on the CPU side and avoid profiling in the editor. The editor can be made to run as the game using the -game command-line argument. The following command line shows an example of using -game and disabling CPU work that is not needed for profiling.

UE4Editor.exe ShooterGame -game -nosound -noailogging -noverifygc

Consider using test builds when profiling

Test builds have lower overhead than Development, while still providing some developer functionality. Consider enabling STATS for Test builds in the engine’s Build.h file, so that UE4’s live GPU profiler (stat GPU) is available. Similarly, consider enabling ALLOW_PROFILEGPU_IN_TEST, so that ProfileGPU is available. More details will be given on stat GPU and ProfileGPU in the Built-In Profiling Tools section.

Test builds of a stand-alone executable require cooked content. If you need to iterate while profiling but want the lower CPU overhead of a Test build, consider using “cook on the fly” (COTF). For example, shader iteration is possible with COTF Test builds.

Perform last checks before profiling

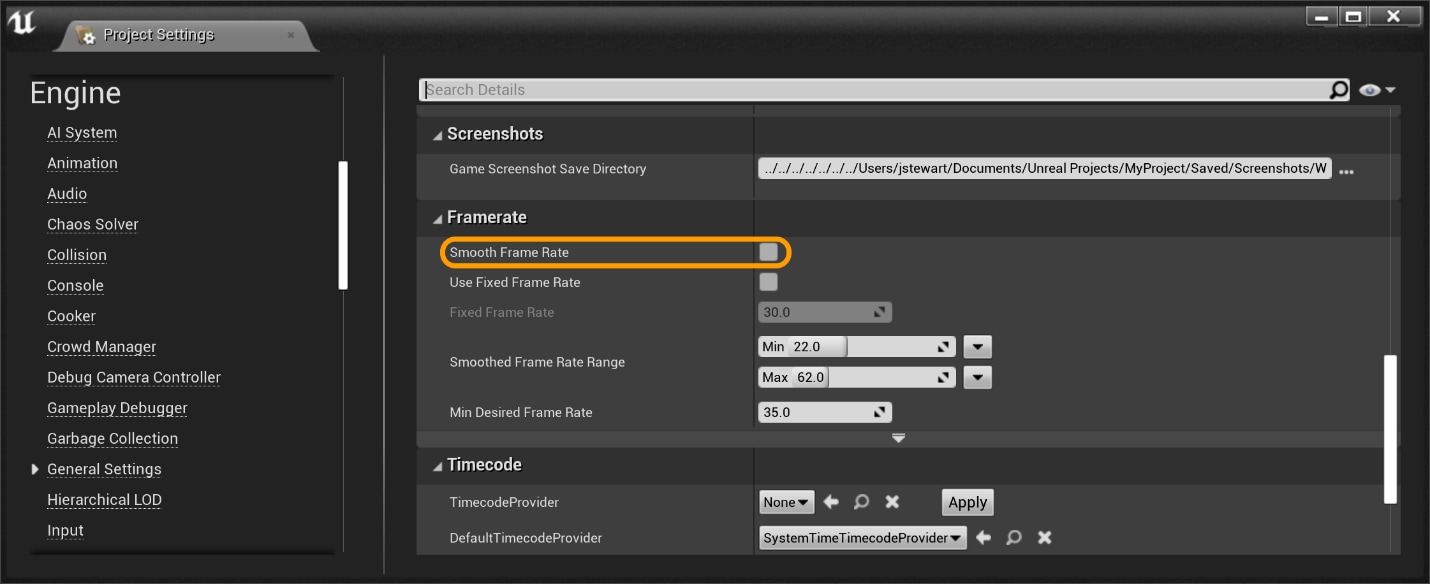

Now that your build is ready for profiling, you should sanity check a few things before getting started. First, ensure Frame Rate Smoothing is disabled. It is disabled by default starting in UE4.24, but it is good to double check. In the editor, you can check in Edit->Project Settings…->Engine->General Settings->Framerate (see the screenshot).

Alternatively, you can ensure bSmoothFrameRate is set to false everywhere it appears in Engine\Config\BaseEngine.ini and your project’s DefaultEngine.ini. You can also add bForceDisableFrameRateSmoothing=true to the [/Script/Engine.Engine] section of your project’s DefaultEngine.ini.
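To audit the config files quickly, you could scan them for the setting. The following is a minimal Python sketch and not part of UE4. The function name find_smoothing_enabled is hypothetical, and the sketch assumes the setting appears as a plain bSmoothFrameRate=<value> line.

```python
import re

# Matches lines such as "bSmoothFrameRate=true" (case-insensitive, optional spaces).
_SMOOTH_RE = re.compile(r"^\s*bSmoothFrameRate\s*=\s*(\w+)", re.IGNORECASE)

def find_smoothing_enabled(ini_text):
    """Return the 1-based line numbers where bSmoothFrameRate is not false."""
    offenders = []
    for number, line in enumerate(ini_text.splitlines(), start=1):
        match = _SMOOTH_RE.match(line)
        if match and match.group(1).lower() not in ("false", "0"):
            offenders.append(number)
    return offenders

# Illustrative config text; read the real .ini files from disk in practice.
sample = (
    "[/Script/Engine.Engine]\n"
    "bSmoothFrameRate=true\n"
    "bForceDisableFrameRateSmoothing=true\n"
)
print(find_smoothing_enabled(sample))  # [2]
```

An empty list means no enabled occurrence was found in the text that was scanned.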

Next, turn off VSync. One way to do this is with the -novsync command-line parameter. Adding this to our previous example gives the following:

UE4Editor.exe ShooterGame -game -nosound -noailogging -noverifygc -novsync
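If you launch profiling runs from a script, it can help to keep the flags in one place. The sketch below only assembles the argument list from the example above. The helper name build_profiling_args is hypothetical, and the executable and project names are simply the ones used in this guide.

```python
def build_profiling_args(project, extra_flags=()):
    """Assemble the editor command line used in the examples above."""
    base_flags = ["-game", "-nosound", "-noailogging", "-noverifygc", "-novsync"]
    return ["UE4Editor.exe", project, *base_flags, *extra_flags]

args = build_profiling_args("ShooterGame")
print(" ".join(args))
```

The resulting list can be passed to a process launcher such as Python's subprocess.run, with any additional flags supplied through extra_flags.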

Lastly, run your build and verify your resolution in the log file. Resolution is, of course, one very important factor in GPU performance, and it is worth verifying that it is what you expect. Open the log file for your build and look for a line like the following:

LogRenderer: Reallocating scene render targets to support 2560x1440
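This check can be automated. The following Python sketch extracts the resolution from log text, assuming the log line has exactly the form quoted above. The function name last_render_resolution is hypothetical.

```python
import re

# Pattern for the LogRenderer line quoted above.
_RES_RE = re.compile(r"Reallocating scene render targets to support (\d+)x(\d+)")

def last_render_resolution(log_text):
    """Return (width, height) from the last matching log line, or None."""
    matches = _RES_RE.findall(log_text)
    if not matches:
        return None
    width, height = matches[-1]
    return int(width), int(height)

log = "LogRenderer: Reallocating scene render targets to support 2560x1440\n"
print(last_render_resolution(log))  # (2560, 1440)
```

The last match is used because render targets may be reallocated more than once during a run.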

Repeatable profiling

This section contains tips for getting consistent results when profiling, so that you can better determine if a potential optimization actually improved performance.

Profiling from a single location

One way to profile is by going to the same location in your level.

A Player Start actor can be used to spawn directly to a specific location upon launch. This can be dragged into the scene through the editor.

If you have no way to change the scene in editor mode, or would like to teleport while in-game, then you can use the UCheatManager BugIt tools. Note: BugIt tools are only available in non-shipping builds.

To teleport using BugIt:

- First open up a console window. In-editor this is accessible via Window->Developer Tools->Output Log. If you are in-game, use console command showlog.

- Type BugIt into the console. The first line in the output string should look like this: BugItGo x y z a b c.

- This BugItGo command can be pasted into the console to teleport to the current location from anywhere.

Reducing noise in profiling results

When attempting to optimize the execution time of a workload, we need to be able to reliably measure the time a certain workload takes. These measurements should have as little noise as possible. Otherwise, we cannot tell whether it ran faster because of our optimization or because some random number generator decided to spawn fewer particles (for example).

UE4 has some built-in functionality to help with this. The -benchmark command-line argument causes UE4 to automatically change certain settings to be more friendly to profiling. The -deterministic argument causes the engine to use a fixed timestep and a fixed random seed. You can then use -fps to set the fixed timestep and -benchmarkseconds to have the engine automatically shut down after a fixed number of timesteps.

Below is an example of using these arguments with a Test build of the Infiltrator demo:

UE4Game-Win64-Test.exe "..\..\..\InfiltratorDemo\InfiltratorDemo.uproject" -nosound -noailogging -noverifygc -novsync -benchmark -benchmarkseconds=211 -fps=60 -deterministic

In the above example, benchmarkseconds is not wall-clock seconds (unless every frame of the demo runs at exactly 60 fps). Rather, it runs 211×60=12,660 frames using a fixed timestep of 1/60 s=16.67 milliseconds. This means that, if you have your project set up to run a camera flythrough on startup, it will advance through the flythrough using fixed timesteps and a fixed random seed. It will then shut down automatically after a fixed number of frames. This can be useful in gathering repeatable average frame time data for your level.
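The arithmetic above can be written as a small Python sketch. The function name benchmark_frames is hypothetical. It only restates the relationship between -benchmarkseconds and -fps described in this section.

```python
def benchmark_frames(benchmark_seconds, fps):
    """Return (frame_count, timestep_ms) for a fixed-timestep run."""
    frame_count = benchmark_seconds * fps
    timestep_ms = 1000.0 / fps
    return frame_count, timestep_ms

frames, step_ms = benchmark_frames(211, 60)
print(frames, round(step_ms, 2))  # 12660 16.67
```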

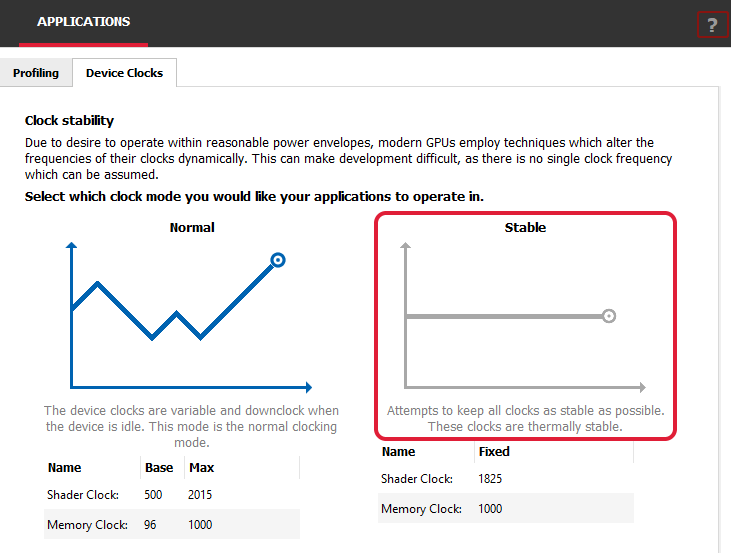

Another technique for helping reduce noise in profile results is to run with fixed clocks. Most GPUs have a default power management system that switches to a lower clock frequency when idle to save power. But this trades performance for lower power consumption and can introduce noise in our benchmarks, as the clocks may not scale the same way between runs of our application. You may fix the clocks on your GPU to reduce this variance. Many third-party tools exist, but the Radeon Developer Panel that comes with the Radeon GPU Profiler has a Device Clocks tab under Applications which can be used to set a stable clock on AMD RDNA™ GPUs, as shown below:

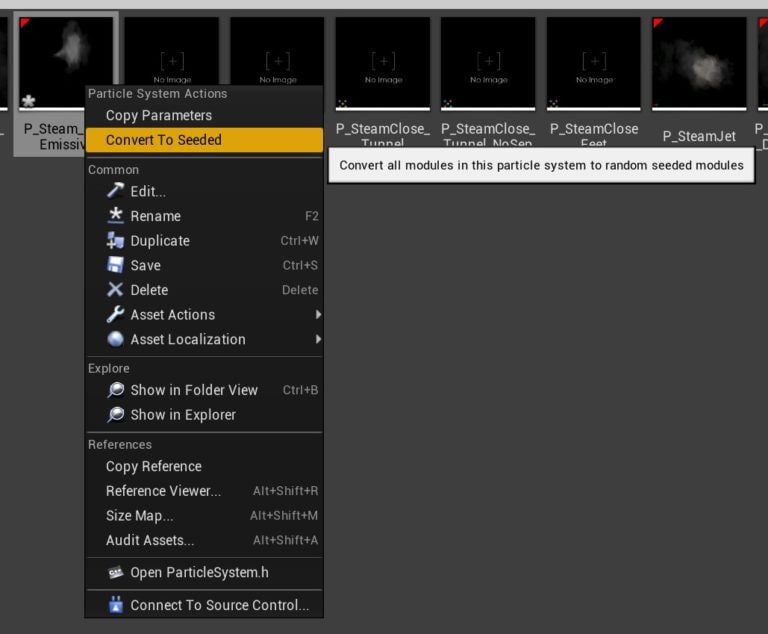

Getting back to reducing variability in UE4, you may find that some things do not obey the fixed random seed from the -deterministic command-line argument. This was the case for some particles in the Infiltrator demo. These particles were causing a noticeable amount of noise in our benchmarks.

The solution to reducing particle noise is to make the random number generators use a fixed seed. This is how you make the particles deterministic in just 2 clicks:

1- Right click on the emitter of particles and then click on “Browse to Asset”

2- Once the emitter asset gets selected in the Content Browser, right click on it and select “Convert To Seeded”

That’s it! You can also select all your emitters in the Content Browser and convert them all at once. Once that has been done the noise will be much reduced and it should be very easy to evaluate your optimizations.

Note: If you are using Niagara particles, look for “Deterministic Random Number Generation in Niagara” in the official UE4.22 release page: https://www.unrealengine.com/en-US/blog/unreal-engine-4-22-released

Consider making your own test scene

Optimizing an effect requires trying many experiments, and every iteration takes time. We need to rebuild the game, cook the content, etc. UE4 features like cook on the fly (COTF) can help with this. But it can also be useful to isolate the effect or technique you are optimizing into a small application.

If only we could generate such an app easily! Fortunately, Unreal comes with a feature called Migrate for that. It extracts a certain asset with all its dependencies and will import it in any other project. In this case, for the sake of creating a small app, we would migrate the effect into an empty project.

Official documentation on migrating assets: https://docs.unrealengine.com/en-US/Engine/Content/Browser/UserGuide/Migrate/index.html

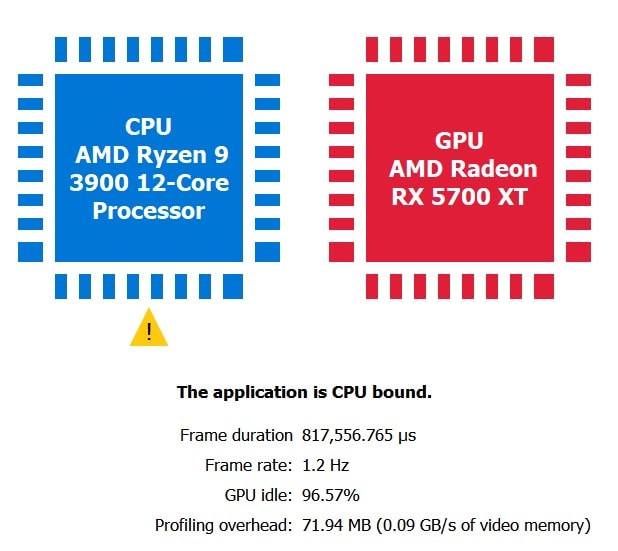

CPU-bound or GPU-bound?

When getting started with performance profiling in UE4, it is important to know where the primary performance bottlenecks are when running on the target platform. Depending on whether the bottleneck lies on the CPU or GPU, we may go in orthogonal directions with our performance analysis.

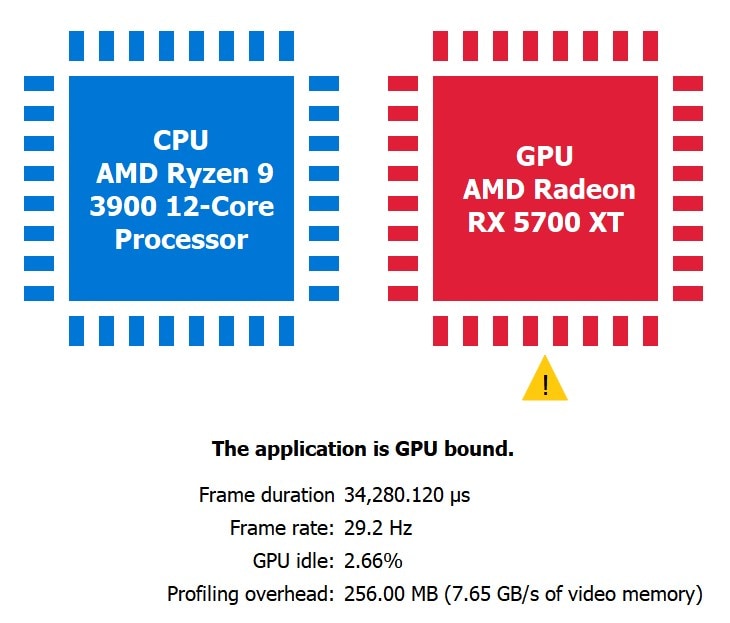

After taking a performance capture with the Radeon Developer Panel (RDP), these details are available in the Radeon GPU Profiler (RGP) from the Overview->Frame Summary view.

The following shows an extreme CPU-bound example, created by adding CPU busy work to UE4, followed by a GPU-bound scene.

A good sanity test to check if the application is indeed CPU bound is to scale up the render resolution. For example, if the GPU workload is increased by setting r.ScreenPercentage from 100 to 150 and RGP shows the same CPU bound result as before, that is a strong indication the app is thoroughly CPU bound.
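The same idea can be expressed as a rough numeric heuristic. The Python sketch below compares average frame times measured at the two screen percentages, rather than RGP's own classification. The function name looks_cpu_bound, the 5% tolerance, and the sample timings are all illustrative assumptions.

```python
def looks_cpu_bound(frame_ms_at_100, frame_ms_at_150, tolerance=0.05):
    """Heuristic: if frame time barely changes when the GPU load rises,
    the CPU is the likely bottleneck. The 5% tolerance is an arbitrary choice."""
    relative_change = (frame_ms_at_150 - frame_ms_at_100) / frame_ms_at_100
    return relative_change <= tolerance

# Made-up timings for illustration only.
print(looks_cpu_bound(20.0, 20.3))  # True: frame time is almost unchanged
print(looks_cpu_bound(16.0, 30.0))  # False: frame time scales with GPU load
```

This is only a first check. A capture in RGP remains the more reliable way to confirm where the bottleneck is.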

Once we determine if we are GPU-bound or CPU-bound, we may decide to diagnose further with RGP (if we are GPU-bound) or switch to other tools like AMD μProf (if we are CPU-bound). As mentioned earlier, this version of the guide is focused on the GPU, so we will now discuss how to determine where the GPU’s time is being spent.

Using RGP

The Radeon™ GPU Profiler (RGP) is a very useful tool for profiling on RDNA GPUs. To capture with RGP using UE4, we must run UE4 on either the D3D12 RHI or the Vulkan RHI. This guide will use D3D12 for its examples. You can invoke the D3D12 RHI either by running the UE4 executable with the -d3d12 command-line argument or by changing the default RHI in the editor: Edit->Project Settings…->Platforms->Windows->Default RHI to DirectX 12.

Before capturing with RGP, uncomment the following line in ConsoleVariables.ini: D3D12.EmitRgpFrameMarkers=1. This ensures that any UE4 code wrapped in a SCOPED_DRAW_EVENT macro appears as a useful marker in RGP.

Note: if you are using a Test build, either enable ALLOW_CHEAT_CVARS_IN_TEST in Build.h so that ConsoleVariables.ini will be used in Test builds, or add a [ConsoleVariables] section to your project’s DefaultEngine.ini:

[ConsoleVariables]

D3D12.EmitRgpFrameMarkers=1

RGP and UE4 example

This section uses one of our UE4 optimization patches on GPUOpen to demonstrate using RGP to profile. This example reduces frame time by 0.2ms (measured on Radeon 5700XT at 4K¹). 0.2ms may not seem like much at first, but if you are targeting 60fps for your game, 0.2ms is roughly 1% of your 60-Hz frame budget.
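To put a saving like this in context, it can be expressed as a share of the frame budget. This is a minimal Python sketch of that arithmetic, and the function name share_of_frame_budget is hypothetical.

```python
def share_of_frame_budget(saving_ms, target_fps):
    """Return the saving as a percentage of the per-frame time budget."""
    budget_ms = 1000.0 / target_fps
    return saving_ms / budget_ms * 100.0

print(round(share_of_frame_budget(0.2, 60), 1))  # 1.2
```

At 60 fps the budget is about 16.67 ms, so 0.2 ms is about 1.2% of it, in line with the rough 1% figure above.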

If you have the patch and want to reproduce the results in this section, first use the console to disable the optimization: r.PostProcess.HistogramReduce.UseCS 0

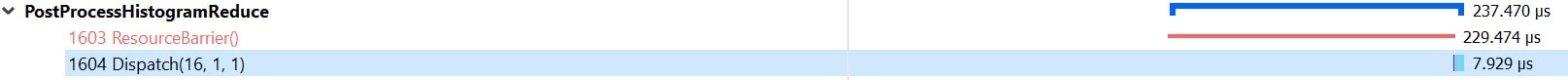

After taking a performance capture with RDP, these details are available in RGP from the Events->Event Timing view. If you are emitting RGP perf markers, you can quickly navigate to the marker that we are investigating by searching for “PostProcessHistogramReduce”.

We can see that the DrawIndexedInstanced() call takes 211us to complete. We can do better!

To inspect the details of the pixel shader running on the GPU, right-click on the draw call, select “View in Pipeline State” and click on PS in the pipeline.

The Information tab shows that our pixel shader is only running 1 wavefront and only taking up 32 threads of that wavefront. On GCN GPUs and above, this kind of GPU workload will execute in ‘partial waves’ which means the GPU is being underutilized.

The ISA tab will give us the exact shader instructions that are executed on GPU hardware as well as VGPR/SGPR occupancy. The ISA view is also useful for other optimizations like scalarization which are not covered here (https://flashypixels.wordpress.com/2018/11/10/intro-to-gpu-scalarization-part-1/)

Viewing the HLSL source ( PostProcessHistogramReduce.usf ) for this shader shows that there is a lengthy loop that we need to parallelize if we want to maximize the GPU hardware and eliminate any partial waves. We did this by switching to a compute shader and leveraging LDS (local data store/groupshared memory) – a hardware feature available on modern GPUs which support Shader Model 5.

Next, we can enable our optimization to see the performance impact: r.PostProcess.HistogramReduce.UseCS 1

After taking another performance capture with RDP and going back to the Event Timings view in RGP:

The time taken for the dispatch is 7us – for a whopping 96% performance uplift! The bulk of the time taken is now in the barrier which is unavoidable as our PostProcessHistogramReduce pass has a data dependency on the prior PostProcessHistogram pass.

The reason for this performance gain is executing shorter loops, leveraging LDS for reduction and using load instead of sample (image loads go through a fast path on RDNA). Going to the ISA view shows us the new LDS work happening within ds_read* and ds_write* instructions.
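The improvement can be checked from the two timings quoted above (211us for the draw, 7us for the dispatch). The Python sketch below uses a hypothetical helper name and only restates that arithmetic.

```python
def time_reduction_percent(before_us, after_us):
    """Return how much shorter the new timing is, as a percentage of the old one."""
    return (before_us - after_us) / before_us * 100.0

print(round(time_reduction_percent(211, 7), 1))  # 96.7
```

Note that this compares only the draw and the dispatch themselves. As described above, the barrier now accounts for most of the remaining time in the pass.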

1 – System Configuration: Ryzen 9 3900, 32GB DDR4-3200, Windows 10, Radeon Software Adrenalin 2020 Edition 20.2.2, 3840×2160 resolution

Built-in profiling tools

This section covers the built-in UE4 profiling tools. These can serve as a supplement to profiling with RGP.

UE4 stat commands

A list of all stat commands is officially documented here: https://docs.unrealengine.com/en-US/Engine/Performance/StatCommands/index.html

The most important commands pruned from the above list:

- stat fps: Unobtrusive view of frames per second (FPS) and ms per frame.

- stat unit: More in-depth version of stat fps:

  - Frame: Total time to finish each frame, similar to ms per frame

  - Game: C++ or Blueprint gameplay operation

  - Draw: CPU render time

  - GPU: GPU render time

  - RHIT: RHI thread time, should be just under the current frame time

  - DynRes: Shows the ratio of primary to secondary screen percentage, separately for viewport width and height (if dynamic resolution is enabled)

- stat unitgraph: Shows the ‘stat unit’ data with a real-time line graph plot. Useful for detecting hitches in otherwise smooth gameplay.

- stat scenerendering: Good for identifying bottlenecks in the overall UE4 rendering pipeline. Examples: dynamic lights, translucency cost, draw call count, etc.

- stat gpu: Shows “live” per-pass timings. Useful for shader iteration and optimization. You may have to set r.GPUStatsEnabled 1 for this to work. Developers with UE4 source code may zoom in on specific GPU work with the SCOPED_GPU_STAT macro.

- stat rhi: Shows memory counters, useful for debugging memory pressure scenarios.

- stat startfile and stat stopfile: Dumps all the real-time stat data within the start/stop duration to a .ue4stats file, which can be opened in Unreal Frontend: https://docs.unrealengine.com/en-US/Engine/Deployment/UnrealFrontend/index.html

GPU Visualizer

The stat commands are great for a real-time view of performance, but suppose you find a GPU bottleneck in your scene and wish to dig deeper into a single-frame capture.

The ProfileGPU command allows you to expand one frame’s GPU work in the GPU Visualizer, which is useful for cases that require detailed info from the engine.

Some examples:

- In stat gpu we see Translucency being slower than BasePass by 1 ms. In GPU Visualizer, we then find a translucent mesh that takes nearly 1ms. We can choose to remove or optimize this mesh to balance time taken for opaque and translucent draws.

- In stat gpu we see both Shadow Depths and Lights->ShadowedLights costing us frame time. In GPU Visualizer, we then identify an expensive dynamic light source by name. We can choose to make this a static light.

For the GUI version, set r.ProfileGPU.ShowUI to 1 before running ProfileGPU.

For more details, check out the official documentation: https://docs.unrealengine.com/en-US/Engine/Performance/GPU/index.html

We highly recommend using RGP in lieu of GPU Visualizer as your profiling workhorse for RDNA GPUs. RGP can have the same workflow as in the above examples. With RGP, you get in-depth GPU performance captures with more accurate timings and low-level ISA analysis.

FPS Chart

Useful for benchmarking over a long period of time, getting stat unit times over the duration. Results get placed in a .csv file that can be plotted in the CSVToSVG Tool: https://docs.unrealengine.com/en-US/Engine/Performance/CSVToSVG/index.html

Console commands to toggle: startfpschart and stopfpschart

Optimizing in Unreal Engine 4

This section presents general advice for the optimization of your content and shaders in UE4.

Optimize your geometry

Good optimization practice means avoiding over-tessellating geometry that produces small triangles in screen space; in general, avoid tiny triangles. This means that keeping your geometry in check is an important factor in meeting your performance targets. The Wireframe view mode accessible through the editor is a great first look at the geometric complexity of objects in your scene. Note that heavy translucency can slow down the Wireframe view mode and makes it look more crowded and less helpful. RenderDoc also shows wireframe.

LODs in UE4 are an important tool to avoid lots of tiny triangles when meshes are viewed at a distance. Refer to the official documentation for details: https://docs.unrealengine.com/en-US/Engine/Content/Types/StaticMeshes/HowTo/LODs/index.html

Optimize your draw calls

UE4 calculates scene visibility to cull objects that will not appear in the final image of the frame. However, if the post-culled scene still contains thousands of objects, then draw calls can become a performance issue. Even if we render meshes with low polygon count, if there are too many draw calls, it can become the primary performance bottleneck because of the CPU side cost associated with setting up each draw call for the GPU. Both UE4 and the GPU driver do work per draw call.

However, reducing draw calls is a balancing act. If you decide to reduce draw calls by using a few larger meshes instead of many small ones, you lose the culling granularity that you get from smaller models.

We recommend using at least version 4.22 of Unreal Engine, to get the mesh drawing refactor with auto-instancing. See the GDC 2019 presentation from Epic for more details: https://www.youtube.com/watch?v=qx1c190aGhs

UE4’s Hierarchical Level of Detail (HLOD) system can replace several static meshes with a single mesh at a distance, to help reduce draw calls. See the official documentation for details: https://docs.unrealengine.com/en-US/Engine/HLOD/index.html

The ‘stat scenerendering’ command can be used to check the draw call count for your scene.

Optimize your GPU execution

We covered one example of optimizing GPU execution in the RGP and UE4 Example section earlier in the guide. We will cover another in the GPUOpen UE4 Optimization Case Study section. This section covers some built-in tools and workflows to help optimize GPU execution in UE4.

Optimization viewmodes

The UE4 editor has many visualization tools to aid with debugging. The most notable of these for debugging performance would be the Optimization Viewmodes. For an overview of the different modes, please see the official documentation: https://docs.unrealengine.com/en-US/Engine/UI/LevelEditor/Viewports/ViewModes/index.html

- If your scene contains multiple light sources with large source radius, then you might want to check Light Complexity to optimize overlapping lights.

- For scenes with static lighting, Lightmap Density shows the texel resolution used during baked lighting for an Actor. If a small object that takes up a small pixel area on the screen shows as red (high density), then it could be optimized. Click on the Actor and change Lighting->Overridden Light Map Res to a lower value. The actual performance cost here is in the memory usage of the Lightmap and/or Shadowmap (depending on the light type used).

- Static shadowmaps allow at most 4 contributing lights per texel. Any excess stationary lights that overcontribute to a region can be visualized in Stationary Light Overlap. UE4 enforces this limit by changing excess stationary lights to movable, marking them with a red cross and visualizing the overlap as a red region in Stationary Light Overlap. Since movable lights are very expensive, we can optimize excess lights by reducing their radius or turning off static shadowing in Light->Cast Shadows.

- Shader Complexity uses a static analysis of the number of shader instructions executed per pixel to determine pixel cost. As a supplement to this, we recommend Events->Wavefront occupancy and Overview->Most expensive events views in RGP to get a summary of where your frame time is going.

- Scenes with heavy translucency, foliage, or particle effects will render pixels with high values in Overdraw. If the average overdraw (marked by OD in the color ramp) stays at high values for most of your application then further optimization may be required.

Optimize your screen percentage

Upscaling is a technique in which an application renders most of its frame at reduced resolution. Then, near the end of the frame, the application enlarges the rendered image to the desired output resolution. Rendering at lower resolution costs fewer shaded pixels while simultaneously reducing memory demands. This can result in significant performance savings without introducing much risk into your development or content pipelines. The trade-off is that rendering at a lower resolution can lead to less detailed final images. Various upscaling methods have been developed to minimize the reduction in quality from rendering at a lower resolution. Stock UE4 implements two separate upscaling algorithms, one spatial and one temporal, which are both controlled by the console variable r.ScreenPercentage.

Assigning any value to this console variable which is greater than 0.0 and less than 100.0 will automatically configure UE4 to upscale your project. The specified value is treated as a percentage multiplier of target resolution. For example, if your target resolution is 2560×1440 and r.ScreenPercentage is set to 75.0, most of your frame will render at 1920×1080, only upscaling to 2560×1440 near the end of the frame.
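The example above can be sketched in Python as follows. The function name render_resolution is hypothetical. Rounding to the nearest pixel is an assumption, because the engine's exact rounding is not described here.

```python
def render_resolution(target_width, target_height, screen_percentage):
    """Scale each axis of the target resolution by the screen percentage."""
    scale = screen_percentage / 100.0
    return round(target_width * scale), round(target_height * scale)

print(render_resolution(2560, 1440, 75.0))  # (1920, 1080)
```

Because the percentage applies to each axis, the number of shaded pixels drops faster than the percentage suggests: at 75.0, only 56.25% of the target pixel count is rendered.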

UE4 Spatial Upscaling

Spatial Upscaling is the default upscaling method employed by UE4. Spatial upscalers are minimally invasive and directly expand a single image without requiring any additional information or context. In this respect, using a spatial upscaler is a lot like resizing a picture in an image editing tool. UE4’s Spatial Upscaler has exceptional performance characteristics but its quality impact may be significant when using lower resolutions compared to alternative upscaling solutions.

UE4 Temporal Upscaling

Temporal Upscaling is not UE4’s default method of upscaling. In order to configure UE4 to use Temporal Upscaling, assign the value of 1 to the console variable r.TemporalAA.Upsampling. Temporal Upscaling in UE4 is applied as part of the Temporal Anti-Aliasing algorithm (TAA), and as a result, TAA must be enabled for Temporal Upscaling to be used. Temporal upscalers are more complex than their spatial counterparts. At a high level, Temporal upscaling typically renders every frame at a different sub-pixel offset, and then combines previous frames together to form an upscaled final image.

Not only is the algorithm itself more complex, but motion vectors must be present and tracked across multiple frames to achieve high quality results. Additionally, UE4 Temporal Upscaling cannot render as much of the frame at reduced resolutions as Spatial Upscaling can, because UE4 Temporal Upscaling must always occur during the application of TAA. Therefore some post-process operations will still render at full resolution even when UE4 Temporal Upscaling is employed. UE4 Temporal Upscaler produces sizeable performance gains over native-resolution rendering, but its complexity may make it more expensive than alternative upscaling solutions. However, the quality of resulting images can be very high.

FidelityFX Super Resolution 1.0

Researchers at AMD have developed an exciting additional option for upscaling in UE4. We call it FidelityFX Super Resolution 1.0, or FSR 1.0 for short. FSR 1.0 uses a collection of cutting-edge algorithms with a particular emphasis on creating high-quality edges, giving large performance improvements compared to rendering at native resolution directly. FSR 1.0 enables “practical performance” for costly render operations, such as hardware ray tracing. FSR 1.0 is a spatial upscaler, which means it has the same minimally invasive nature and exceptional performance characteristics as UE4’s Spatial Upscaler.

It is recommended to expose quality presets for FSR 1.0 as follows:

- Ultra Quality (77% screen percentage)

- Quality (67% screen percentage)

- Balanced (59% screen percentage)

- Performance (50% screen percentage)
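To see what these presets mean for shading work, the Python sketch below converts each screen percentage into the approximate share of target-resolution pixels that is rendered before upscaling. The names FSR_PRESETS and shaded_pixel_share are hypothetical. The percentages are the ones listed above, and the result ignores any per-axis rounding to whole pixels.

```python
# Screen percentages for the presets listed above.
FSR_PRESETS = {"Ultra Quality": 77, "Quality": 67, "Balanced": 59, "Performance": 50}

def shaded_pixel_share(screen_percentage):
    """Percentage of target-resolution pixels rendered before upscaling."""
    scale = screen_percentage / 100.0
    return round(scale * scale * 100.0, 1)

for name, percentage in FSR_PRESETS.items():
    print(f"{name}: {shaded_pixel_share(percentage)}% of target pixels")
```

For example, the Performance preset renders a quarter of the target pixel count, since the 50% scale applies to both width and height.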

FSR 1.0 will run on a wide variety of GPUs and is completely open source on GitHub. FSR 1.0 can be integrated into your UE4.27.1 (or higher) project with our FSR plugin, or by applying this FSR patch (patch requires you to be a UE-registered developer) for earlier versions. Check out FSR on GPUOpen for more information.

GPUOpen UE4 optimization case study

At AMD, we maintain multiple teams with the primary focus of evaluating the performance of specific game titles or game engines on AMD hardware. These teams frequently use many of the methodologies presented in this document while evaluating UE4 products. In this section, we will take a guided look into the progression of some of those efforts. Easy integrations of the results from optimizations discussed in this section (and more) are all available here.

Case Study 1: Use one triangle for fullscreen draws

Step 1 – Identify the optimization target

The life cycle of this optimization begins while evaluating a Radeon GPU Profiler (RGP) trace of Unreal Engine running on DX12. Before beginning any evaluation in RGP, ensure that UE4 is configured to emit RGP frame markers. This dramatically simplifies the task of navigating the sheer volume of data in RGP profiles and can be accomplished for DX12 by assigning the CVar value D3D12.EmitRgpFrameMarkers=1.

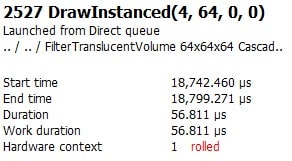

Under the OVERVIEW tab in RGP, there is a panel which presents a sorted listing of Most expensive events. In one capture, two specific events – right next to each other under this sorting – stand out as an optimization candidate:

This pair stands out for a few specific reasons:

- The same Event with identical User Event information and very similar Duration happens twice back to back during the frame (Event ID 2527 vs Event ID 2529). This suggests these two Events are closely related to each other; they may even be the exact same operation against different inputs or outputs. If this is true, any savings yielded while optimizing the first event could also impact the second… scaling our efforts by a factor of 2.

- There are 64 instances of this draw being rendered. This suggests any savings yielded optimizing 1 such draw could also impact the other 63 instances, scaling our efforts by an additional factor of 64.

- Even a sub-microsecond improvement to each individual draw could add up very quickly if scaled 128 times, so let’s zoom in on this.

Step 2 – Understand the optimization target

Before we begin attempting to optimize this event, we should pause to make sure we understand both what it does and how it does it. We will accomplish this by inspecting the operation in Renderdoc. Before beginning any debugging exercise in Renderdoc, ensure that UE4 is configured to preserve shader debug information. If this information is available, Renderdoc will provide much more context about the execution of a given event. You can accomplish this by assigning the CVar value r.Shaders.KeepDebugInfo=1. If you are turning this value on for the first time, be prepared to wait for completion of a lengthy shader compilation job the next time you launch Unreal.

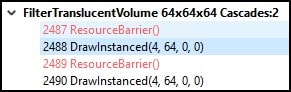

In the Most expensive events panel of RGP, right-clicking the desired event and selecting View in Event timing opens the EVENTS tab Event timing panel, and transports you directly to the targeted event.

In this view, we can see that the event is part of a debug region titled FilterTranslucentVolume, and we will use this information to locate this event in Renderdoc moving forward.

In a Renderdoc capture of this scene, searching the Event Browser for FilterTranslucentVolume directly transports us to the Renderdoc representation of the targeted event.

With debug information preserved, we can directly see the full HLSL source with all relevant #includes inlined and all relevant #ifs already evaluated for each stage of the rendering pipeline by clicking the View button at the top of the stage data panel in the Pipeline State tab. We can also see the entry point in that source file for the target shader or view the raw disassembly if needed.

Inspection of the source files associated with each stage of this pipeline demonstrates that this event is reading pixels from a 64x64x64 3D texture and averaging the results into another 64x64x64 3D texture, one slice at a time. The Pixel Shader selects an appropriate slice within both input and output textures based on the Instance ID of the current draw. The Vertex and Geometry Shaders do no matrix manipulation against input vertices.

The combination of a 4 vertex Draw with a Vertex Shader that does no matrix manipulation suggests that this operation is simply drawing a front-facing quad as a triangle strip. The additional context of what the Pixel Shader is doing suggests that quad is probably intended to cover the entire 64×64 region of a single slice of the output 3D texture. Inspection of the Input Assembler stage in Renderdoc – specifically the Mesh View visualization tool – verifies these expectations for the first instance drawn by this event. The 64×64 pink region in every slice of the Highlight Drawcall overlay in the Texture Viewer tab corroborates that information for each other instance, and across the entire output 3D texture space.

Step 3 – Define the optimization plan

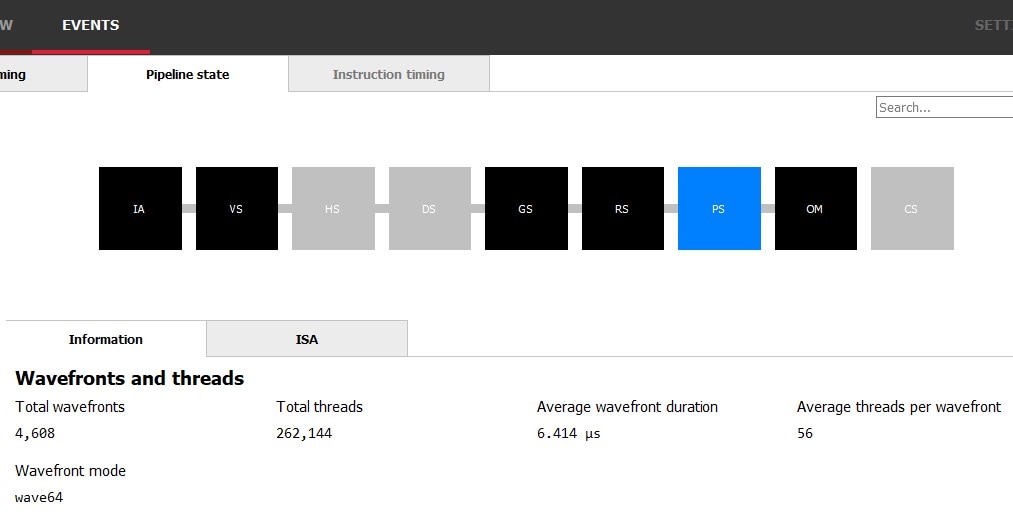

Armed with this information, we can finally begin trying to optimize! We start this process by returning to the Event timing panel in RGP. Selecting the targeted Event and then swapping to the Pipeline State tab at the top of the panel provides additional information about this draw. Selecting the PS pipeline stage brings up additional information specifically about Pixel workloads.

Here we can see a total of 262,144 unique pixel shader invocations, which aligns with our expectations from inspecting the event in Renderdoc: every pixel in a 64x64x64 3D texture should have been output, and 64x64x64 = 262,144. The other information presented here could be concerning. AMD GPUs organize work into related groups called wavefronts. The wavefront mode for this event is wave64, so under ideal circumstances there should be 64 threads per wavefront; we only realized 56 of those threads in the average wavefront during this event. This means we may be wasting cycles, and it represents a potential opportunity for optimization. Whether that potential can be realized depends entirely on why we’re failing to reach 64 threads per wavefront.
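The arithmetic behind these figures can be checked with a short sketch. The three input values come from the text above; the estimated wavefront count is only an inference from the reported average, not a number read from RGP.

```python
# Figures taken from the text above.
TEXTURE_DIM = 64           # the 3D texture is 64x64x64
WAVE_SIZE = 64             # wave64 mode
AVG_THREADS_PER_WAVE = 56  # average reported for this event

invocations = TEXTURE_DIM ** 3
ideal_waves = invocations // WAVE_SIZE

# Estimate only: an average of 56 active threads implies roughly this many
# wavefronts were needed to cover the same number of pixels.
estimated_waves = invocations / AVG_THREADS_PER_WAVE
utilization = AVG_THREADS_PER_WAVE / WAVE_SIZE

print(f"pixel shader invocations: {invocations:,}")
print(f"ideal wavefront count:    {ideal_waves:,}")
print(f"estimated wavefronts:     {estimated_waves:,.0f}")
print(f"thread utilization:       {utilization:.1%}")
```

On these numbers, one in eight threads of the average wavefront is idle.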

At a high level, the organization of related work into wavefronts normally produces highly efficient execution through SIMD. In this scenario, that organization also comes with a drawback. Because the quad is rendered using two separate triangles, separate wavefronts are generated for the pixel work associated with each of those triangles. Some of the pixels near the boundary separating those triangles end up being organized into partial wavefronts, in which some of the threads are simply disabled because they represent pixels that are outside of the active triangle. The relatively small dimensions of each individual 64×64 output region exacerbate this phenomenon as a percentage of overall work. Entire documents have been produced detailing the causes behind this phenomenon. We encourage you to read some of the AMD whitepapers for additional information!
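A toy model makes the mechanism concrete. The sketch below assumes that one wave64 covers exactly one 8×8 pixel tile and that pixels from different triangles never share a wavefront. The tile shape and the tie-break on the diagonal are assumptions made for illustration only; real hardware packs pixels into wavefronts differently, so the output illustrates the effect and is not a prediction of what RGP reports.

```python
from collections import Counter

SLICE = 64              # each slice of the output texture is 64x64 pixels
TILE = 8                # ASSUMPTION: one wave64 covers one 8x8 pixel tile
WAVE_SIZE = TILE * TILE


def simulate(split_diagonal):
    """Return (wavefronts, partial wavefronts, average active threads)."""
    threads = Counter()
    for y in range(SLICE):
        for x in range(SLICE):
            # With two triangles, pixels on either side of the diagonal belong
            # to different primitives and cannot share a wavefront in this model.
            primitive = int(x < y) if split_diagonal else 0
            threads[(x // TILE, y // TILE, primitive)] += 1
    partial = sum(1 for count in threads.values() if count < WAVE_SIZE)
    average = sum(threads.values()) / len(threads)
    return len(threads), partial, average


for label, split in (("two triangles", True), ("one triangle", False)):
    waves, partial, average = simulate(split)
    print(f"{label}: {waves} wavefronts, {partial} partial, "
          f"{average:.1f} threads on average")
```

In this model, every tile that straddles the diagonal is split into two partial wavefronts, so the two-triangle case needs 72 wavefronts where the single-primitive case needs 64.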

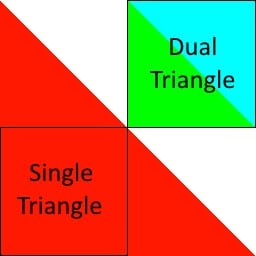

From here, the solution to improve the efficiency of organizing existing pixel work is relatively straightforward. Since the problem results from the existence of a large pixel-space boundary between two triangles, eliminating that boundary eliminates the problem. While inspecting this event in Renderdoc, we learned that the entire 64×64 region represented by each slice of the render target is being output. Pixels that would fall outside that region are implicitly discarded before ever reaching the Pixel Shader stage of the rendering pipeline, and we can take advantage of this fact to reconsider how we cover this region. The image to the right shows how we could fully cover this region (and then some) with a single triangle. While the exact coverage afforded by the Dual Triangle representation seems more prudent at first, the data we’ve collected so far suggests that eliminating the boundary between those two triangles may ultimately be more efficient.
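As a quick sanity check of the single-triangle idea, the sketch below tests whether one oversized triangle covers every pixel center of a 64×64 region. The coordinates are an assumption: the region is taken to be [-1, 1] × [-1, 1] and the triangle uses the common (-1, -1), (3, -1), (-1, 3) construction, which may differ from the vertices used in the actual patch.

```python
def edge(a, b, p):
    """Edge function: positive when p lies to the left of the edge a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def inside(tri, p):
    """True when p lies inside or on a counter-clockwise triangle."""
    a, b, c = tri
    return edge(a, b, p) >= 0 and edge(b, c, p) >= 0 and edge(c, a, p) >= 0


# ASSUMPTION: hypothetical vertices, not taken from the patch.
BIG_TRIANGLE = [(-1.0, -1.0), (3.0, -1.0), (-1.0, 3.0)]
REGION_CORNERS = [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0), (1.0, 1.0)]

N = 64  # pixels per side of one slice
pixel_centers = [
    (-1 + (x + 0.5) * 2 / N, -1 + (y + 0.5) * 2 / N)
    for y in range(N)
    for x in range(N)
]
covered = sum(inside(BIG_TRIANGLE, p) for p in pixel_centers)

print(f"pixel centers covered: {covered} of {N * N}")
print("all region corners covered:",
      all(inside(BIG_TRIANGLE, c) for c in REGION_CORNERS))
```

With these assumed vertices, the triangle's hypotenuse passes through the region's far corner, so the whole region is covered and the rest of the triangle falls outside the region.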

We can also theorize an additional possible benefit to this refactor. During inspection, we noted that the Pixel Shader is largely sampling pre-generated textures and averaging the results into the output render target. There is very little computational work going on here, and most of the expense for this kernel should present as waiting on memory accesses. Eliminating the second triangle will change the rasterization pattern of this quad, because individually generated wavefronts are no longer restricted to covering a single triangle – half of the input and output regions. Removing this condition allows wavefronts to operate on entire blocks of compressed memory or entire scanlines of uncompressed memory in unimpeded succession. This is likely to improve the benefits yielded by spatial caching on our memory accesses, and we expect we may see improvement in overall cache utilization as a result.

Step 4 – Implement the optimization plan

With the optimization plan in place, it’s time to implement. We again use the debug region from both RGP and Renderdoc to help us identify the appropriate location to make this refactor in Unreal Engine source. Searching the source code for FilterTranslucentVolume produces a handful of results, including one which invokes the macro SCOPED_DRAW_EVENTF. That macro is producing the debug markers we see in both Renderdoc and RGP; we’ve found our entry point. Inspecting the source to that function eventually takes us to the function RasterizeToVolumeTexture in the file VolumeRendering.cpp, where we find two things:

- The call to SetStreamSource, using the vertex buffer from the object GVolumeRasterizeVertexBuffer. We’ll want to evaluate how this object gets initialized and replace that initialization with the vertices for our single, larger triangle.

- The call to DrawPrimitive. When we’ve replaced the quad composed of two triangles with a single triangle, we’ll likely need to tell DrawPrimitive to reduce the number of primitives drawn from 2 to 1 as well.

With our foot now firmly in the door, the remainder of this implementation exercise is carried out in the patch available here.

Step 5 – Make sure the optimized implementation works

It is important to ensure the optimization works completely and correctly before you start measuring performance gains. Skipping this step frequently results in apparent significant wins that are ultimately undercut when functional holes are later realized. Renderdoc remains the tool of choice here. Since we’ve already spent time evaluating and understanding the original implementation, knowing what to review in Renderdoc post-optimization is straightforward. We haven’t touched any of the shaders, so we don’t expect problems there. We do need to ensure that the output of our new triangles completely covers every slice of the 3D texture, survives backface culling, and has appropriate texture coordinates. The Mesh View tool and Texture Viewer Overlays in Renderdoc make quick work of this validation.
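Two of these checks, winding and texture coordinates, can also be reasoned about on paper before opening a capture. The sketch below uses hypothetical vertex positions and UVs (none are taken from the engine or the patch) and assumes the intended mapping is uv = (position + 1) / 2. Which winding the engine treats as front-facing is not stated here, so the sketch only checks that the new triangle winds the same way as one of the original two.

```python
def signed_area(tri):
    """Positive for counter-clockwise winding, negative for clockwise."""
    (ax, ay), (bx, by), (cx, cy) = tri
    return 0.5 * ((bx - ax) * (cy - ay) - (by - ay) * (cx - ax))


def interpolate_uv(tri, uvs, p):
    """Barycentric interpolation of per-vertex UVs at point p."""
    a, b, c = tri
    area = signed_area(tri)
    weights = (
        signed_area((p, b, c)) / area,
        signed_area((a, p, c)) / area,
        signed_area((a, b, p)) / area,
    )
    u = sum(w * uv[0] for w, uv in zip(weights, uvs))
    v = sum(w * uv[1] for w, uv in zip(weights, uvs))
    return u, v


# ASSUMPTION: hypothetical data for illustration only.
OLD_TRIANGLE = [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]  # half of the old quad
NEW_TRIANGLE = [(-1.0, -1.0), (3.0, -1.0), (-1.0, 3.0)]  # oversized replacement
NEW_UVS = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]

same_winding = signed_area(OLD_TRIANGLE) * signed_area(NEW_TRIANGLE) > 0
print("same winding as before:", same_winding)

for corner in [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0), (1.0, 1.0)]:
    print(corner, "->", interpolate_uv(NEW_TRIANGLE, NEW_UVS, corner))
```

Because the UVs extend past 1.0 at the oversized vertices, the interpolated values at the four corners of the visible region still land on the unit square.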

Step 6 – Analyze performance results

The first and most important result to evaluate is that we see performance savings on the event in question. This task is easily handled by returning to the Event timing panel of the EVENTS tab in RGP and clicking the Show details button in the top right corner of the panel. This expands the details pane, which includes the overall duration of the individual task. In this case, we can see a reduction of about 20us.

Before – dual triangle quad (4 vertices)

After – single triangle quad (3 vertices)

Because we previously identified that this exact event seems to happen twice, we can also easily demonstrate that we see those 20us savings twice. Great!

There is also value in ensuring we correctly understood why things have gotten faster. Sometimes, additional savings come out of this exercise when you realize you still haven’t fixed the issue you set out to fix. We will use both RGP and Renderdoc in this evaluation. Since we have already used RGP to see the inefficient thread utilization of our wavefronts, it is easy to return to that view in the Pipeline state panel of the EVENTS tab and validate that the average threads per wavefront of Pixel Shader work has increased. It has, all the way to 64, which is exactly what we wanted to see. This indicates we have successfully eliminated all partial wavefronts from this event as a result of this operation.

We also see a hint here that our theorized improvement to cache utilization may have borne fruit. In addition to spawning fewer and more efficiently organized wavefronts, the average wavefront duration has simultaneously decreased from 6.414us to 5.815us. However, this data is anecdotal and does not prove anything. In order to get proof that cache utilization improved, we can inspect AMD-specific performance counters.

Unfortunately, as of the time of this writing, RGP does not yet support streaming performance counters. However, the latest versions of Renderdoc do, including AMD-specific performance counters! We can inspect this information in a Renderdoc capture of our scene by selecting Window > Performance Counter Viewer to open the associated tab. Clicking the Capture counters button opens a dialog which includes an AMD dropdown, from which we can select cache hit and miss counters for all cache levels.

After clicking the Sample counters button, Renderdoc will re-render the scene with counters enabled. Next to the Capture counters button in the Performance Counter Viewer tab is a button that says Sync Views. Ensure that Sync Views is enabled, and then select the targeted event in the Event Browser. If you already had the targeted event selected, select a different event and then simply go back. The Performance Counter Viewer tab will automatically scroll to and highlight the row containing counters for the targeted event.

By combining the cache hit counts and cache miss counts, we can express effective cache utilization as the percentage of cache requests that were successful. That exercise was completed in Excel for this optimization, and the raw data is presented here:

| Cache | Dual-Tri hit rate | Single-Tri hit rate | DT Hit | DT Miss | ST Hit | ST Miss |
|---|---|---|---|---|---|---|
| L0 | 95.60% | 99.29% | 4426426 | 203518 | 3034589 | 21563 |
| L1 | 56.52% | 23.21% | 37413 | 28777 | 5092 | 16850 |
| L2 | 71.60% | 73.37% | 83457 | 33095 | 49350 | 17908 |
| Overall | 94.49% | 98.21% | 4547296 | 265390 | 3089031 | 56321 |

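These results demonstrate significant overall improvement and show hugely successful L0 utilization after applying this optimization. The L1 hit rate did fall, from 56.52% to 23.21%, but L1 handles such a small share of total requests (21,942 after the change, down from 66,190) that the overall hit rate still rose from 94.49% to 98.21%. This analysis of performance results indicates success across all criteria.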
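The percentages in the table can be reproduced from the raw hit and miss counts. The sketch below uses the counter values exactly as listed; the dictionary layout and function names are illustrative only.

```python
# (hits, misses) per cache level, copied from the table above.
COUNTERS = {
    "L0": {"dual": (4426426, 203518), "single": (3034589, 21563)},
    "L1": {"dual": (37413, 28777), "single": (5092, 16850)},
    "L2": {"dual": (83457, 33095), "single": (49350, 17908)},
}


def hit_rate(hits, misses):
    """Fraction of cache requests that were hits."""
    return hits / (hits + misses)


def totals(variant):
    """Sum hits and misses across all cache levels for one variant."""
    hits = sum(levels[variant][0] for levels in COUNTERS.values())
    misses = sum(levels[variant][1] for levels in COUNTERS.values())
    return hits, misses


for level, variants in COUNTERS.items():
    print(f"{level}: dual {hit_rate(*variants['dual']):.2%}, "
          f"single {hit_rate(*variants['single']):.2%}")
print(f"Overall: dual {hit_rate(*totals('dual')):.2%}, "
      f"single {hit_rate(*totals('single')):.2%}")
```

The Overall row is computed from summed hits and misses rather than by averaging the per-level percentages, which is why it sits so close to the L0 figures: L0 handles the vast majority of requests.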

Step 7 – Make sure nothing else is broken

Unreal Engine is a large and complex code base, and sometimes it can be difficult to make targeted changes that don’t have side effects. Testing paradigms invariably change from project to project and from optimization to optimization, but here are a few tips to keep in mind:

- Use a debugger. Breakpoints can easily provide you with callstacks and context any time anyone invokes code that you’ve changed. Make sure you expect it every time it happens.

- Break down all identified invocations in Renderdoc using the same strategies outlined in Step 2.

- Test more than one scene, and keep in mind that if your project is dynamic, testing still images may be insufficient to catch all issues.

- Static analysis may be required depending on your use case and target audience. It’s the hardest answer, but sometimes it’s also the best.

- Sometimes “close enough” is the same thing as “good enough”, particularly if you aren’t distributing your changes externally. If your change produces artifacts to intermediate values in Renderdoc that are not noticeable in the final scene, you may not need to fix that.

- Sometimes an optimization is not intended to produce results identical to those of the original algorithm. Sometimes a slightly worse but significantly faster answer is a good tradeoff, especially for video games.

- Computing the Mean Squared Error (MSE) is a valuable tool for objectively quantifying artifacts and making sound decisions about error tolerance. AMD engineers frequently use Compressonator to help with this kind of analysis.
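For reference, the MSE mentioned in the last tip is simple to compute by hand. The sketch below is a minimal illustration that treats two images as flat lists of channel values. It is not how Compressonator implements the metric, and the sample values are made up.

```python
def mean_squared_error(reference, candidate):
    """Average of squared per-value differences between two images."""
    if len(reference) != len(candidate):
        raise ValueError("images must contain the same number of values")
    if not reference:
        raise ValueError("images must not be empty")
    squared = ((r - c) ** 2 for r, c in zip(reference, candidate))
    return sum(squared) / len(reference)


# Hypothetical 8-bit channel values before and after an optimization.
before = [0, 64, 128, 255]
after = [0, 66, 127, 255]
print("MSE:", mean_squared_error(before, after))
```

An MSE of zero means the two outputs are identical. How large a value is acceptable depends on the project and on where in the frame the differences appear.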

Executing this step properly is always important and can sometimes increase the relative value of an optimization. While performing this exercise on the single-triangle optimization discussed here, we identified many draws beyond the original pair that were positively affected by this optimization. Overall expected savings increased accordingly.